Unveiling the Convergence of Foundation Models Towards a Unified Reality

Insights from the paper: 'The Platonic Representation Hypothesis'

Over the past two years, we have all witnessed the remarkable evolution of large AI models. As both an observer and a practitioner of these developments, I had planned to start this blog by sharing what I have learned from working with LLMs and generative AI. The topics I had in mind included the current gaps between large models and real humans, the methods and challenges of implementing RAG, effective chunking methods, ways to enhance RAG's retrieval capabilities, and more.

However, I recently read the paper "The Platonic Representation Hypothesis" (https://arxiv.org/abs/2405.07987), which first caught my attention because Ilya Sutskever liked it, and I felt compelled to share my thoughts on this intriguing topic first.

What is this paper about? "...as vision models and language models get larger... We hypothesize that this convergence is driving toward a shared statistical model of reality, akin to Plato’s concept of an ideal reality." To support its claim that the representations of different large models tend to converge, the paper uses kernel alignment metrics, which quantify how similar the representations of two models are.

This can be understood as follows: regardless of the type of large model, as long as the scale is sufficient and the model is properly trained, the ways these models interpret and predict the world tend to become consistent. Just imagine a group of people who have read all the books, traveled to all places, performed all tasks, and experienced everything; their understanding of the world would end up much the same. This convergence among large AI models suggests an underlying pattern: when sufficiently trained, large models may not only mimic but also understand the real world in a unified way. Isn’t that fascinating?
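To make the kernel alignment idea a bit more concrete, here is a minimal Python sketch of a mutual k-nearest-neighbor alignment score, in the spirit of the nearest-neighbor-based metric the paper describes. It is my own simplified illustration, not the authors' code: the function names, the cosine-similarity kernel, the value of `k`, and the random toy features standing in for "model A" and "model B" are all assumptions.

```python
import numpy as np


def knn_indices(feats: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k nearest neighbors of each row under cosine similarity."""
    normed = feats / np.linalg.norm(feats, axis=1, keepdims=True)
    sim = normed @ normed.T
    np.fill_diagonal(sim, -np.inf)  # a point is not its own neighbor
    return np.argsort(-sim, axis=1)[:, :k]


def mutual_knn_alignment(feats_a: np.ndarray, feats_b: np.ndarray, k: int = 10) -> float:
    """Mean fraction of shared k-nearest neighbors between two feature sets.

    Row i of feats_a and row i of feats_b must describe the same input
    (for example, an image and its caption).
    """
    nn_a = knn_indices(feats_a, k)
    nn_b = knn_indices(feats_b, k)
    overlap = [len(set(a) & set(b)) / k for a, b in zip(nn_a, nn_b)]
    return float(np.mean(overlap))


# Hypothetical toy data: a hidden "reality" z observed through two views.
rng = np.random.default_rng(0)
z = rng.normal(size=(200, 8))
view_a = z @ rng.normal(size=(8, 32))           # stand-in for "model A" features
view_b = np.tanh(z @ rng.normal(size=(8, 32)))  # stand-in for "model B" features
unrelated = rng.normal(size=(200, 32))          # features that ignore z

print("two views of z:", mutual_knn_alignment(view_a, view_b))
print("unrelated:     ", mutual_knn_alignment(view_a, unrelated))
```

The score lies between 0 and 1: it is 1 when both feature sets agree on every point's neighbors, and it stays close to chance level when the two sets have nothing in common. The exact numbers on the toy data depend on the seed and on `k`, so treat them as illustrative only.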

A few thoughts after reading:

"Allegory of the Cave": In this scenario, prisoners interpret shadows as reality. Once one of them is freed and sees the outside world, they realize the shadows are only projections of real objects.

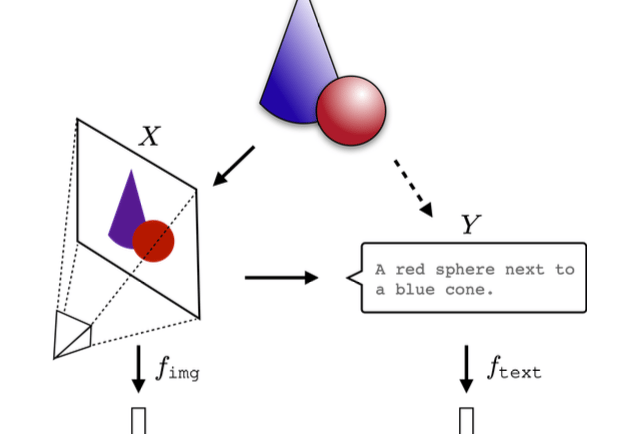

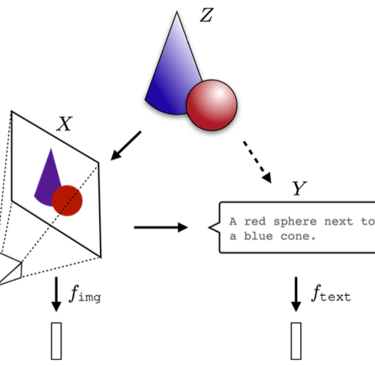

This concept is illustrated in the paper’s first figure (see picture above). Images X and text Y are projections of the real world Z into people's minds, and the representation learning algorithms of large models are expected to converge on a unified representation of Z. The increase in model size and the diversity of data and tasks are key drivers of this convergence. Essentially, AIs are learning everything from various human-visible projections: text, language, images, etc., all of which are projections of the real world.

"Unified Reality": The paper explores a general trend of representational convergence. Across neural networks that differ in architecture, training data, and even modality, it finds that more capable language models align better with more capable vision models. One way to read this is that a model's behavior is shaped less by its architecture, parameters, or optimizer than by its training corpus. The paper suggests that if you want to train the best vision model, you should train not just on images but also on sentences. Conversely, if you want to build the best LLM, you should also consider training it on image data.

"Big and Simple": Larger models are more likely to share a common representation than smaller ones. As we train larger models that solve more tasks at once, we should expect fewer possible solutions. "Deep networks are biased toward finding simple fits to the data, and the bigger the model, the stronger the bias. Therefore, as models get bigger, we should expect convergence to a smaller solution space." The paper notes that larger models have more parameters and computational power, allowing them to explore more ways to fit the same dataset ("the same reality"), yet they tend to favor simple solutions. Simple solutions, in turn, help the model solve problems better, especially ones it has never seen before.

One more thought… The paper also mentions 'Convergent Realism'. According to this view, although we can't be 100% sure how the real world operates, our understanding of it through scientific research will gradually converge to the truth. Thus, if human cognition converges to the truth and large models converge to human cognition, then in theory large models can converge to the truth. Ok, great, but wait, is that all? Returning to the 'Allegory of the Cave': when a prisoner goes outside, can they really discover the ideal reality? How do we know that the perfect forms are not just the result of the human brain processing projections? Is reality really as we think it is? Apparently, there is 'lots left to explain', both technically and philosophically. One thing is for sure: we should never stop pursuing the truth and essence.